New study shows American Sign Language is shaped by the people who use it to make communication easier

The way we speak today isn’t the way that people talked thousands—or even hundreds—of years ago. William Shakespeare’s line, “to thine own self be true,” is today’s “be yourself.” New speakers, ideas, and technologies all seem to play a role in shifting the ways we communicate with each other, but linguists don’t always agree on how and why languages change. Now, a new study of American Sign Language adds support to one potential reason: Sometimes, we just want to make our lives a little easier.

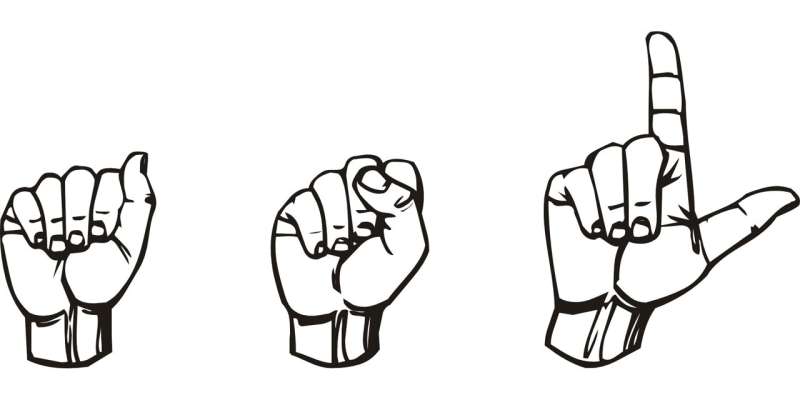

Deaf studies scholar Naomi Caselli and a team of researchers found that American Sign Language (ASL) signs that are challenging to perceive (those that are rare or have uncommon handshapes) are made closer to the signer’s face, where people often look during sign perception. By contrast, common signs, and those with more routine handshapes, are made farther from the face, in the perceiver’s peripheral vision. Caselli, a Boston University (BU) Wheelock College of Education & Human Development assistant professor, says the findings suggest that ASL has evolved so that its signs are easier for people to recognize. The results were published in Cognition.

“Every time we use a word, it changes just a little bit,” says Caselli, who’s also codirector of the BU Rafik B. Hariri Institute for Computing and Computational Science & Engineering’s AI and Education Initiative. “Over long periods of time, words with uncommon handshapes have evolved to be produced closer to the face, and therefore, are easier for the perceiver to see and recognize.”

Although studying the evolution of language is complex, says Caselli, “you can make predictions about how languages might change over time, and test those predictions with a current snapshot of the language.”

With researchers from Syracuse University and Rochester Institute of Technology, she looked at the evolution of ASL with help from an artificial intelligence (AI) tool that analyzed videos of more than 2,500 signs from ASL-LEX, the world’s largest interactive ASL database. Caselli says they began by using the AI algorithm to estimate the position of the signer’s body and limbs.

“We feed the video into a machine learning algorithm that uses computer vision to figure out where key points on the body are,” says Caselli. “We can then figure out where the hands are relative to the face in each sign.” The researchers then matched that with data from ASL-LEX (which was created with help from the Hariri Institute’s Software & Application Innovation Lab) about how often the signs and handshapes are used. They found, for example, that many signs that use common handshapes, such as the sign for children (which uses a flat, open hand), are produced farther from the face than signs that use rare handshapes, like the one for light.
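The pipeline Caselli describes (locate body keypoints, measure where the hands sit relative to the face, then compare that against frequency data) can be illustrated with a short Python sketch. This is not the study’s code. The keypoint names (`nose`, `right_wrist`, `left_shoulder`, `right_shoulder`), the choice of the right wrist as the dominant hand, the shoulder-width normalization, averaging over frames, and the use of a Spearman rank correlation are all illustrative assumptions, and the toy numbers in the demo are invented.

```python
import math
from statistics import mean

# Hypothetical per-frame pose output: keypoint name -> (x, y) in image coordinates.
Frame = dict[str, tuple[float, float]]


def hand_face_distance(frames: list[Frame], hand: str = "right_wrist") -> float:
    """Mean wrist-to-nose distance across frames, divided by shoulder width.

    Assumption: the right wrist stands in for the dominant hand and the nose
    stands in for the face; normalizing by shoulder width makes signers filmed
    at different scales comparable.
    """
    dists = []
    for f in frames:
        shoulder_width = math.dist(f["left_shoulder"], f["right_shoulder"])
        dists.append(math.dist(f[hand], f["nose"]) / shoulder_width)
    return mean(dists)


def ranks(values: list[float]) -> list[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank correlation: the Pearson correlation of the two rank lists."""
    rx, ry = ranks(x), ranks(y)
    mx, my = mean(rx), mean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


if __name__ == "__main__":
    # Invented toy data for illustration only; these are not ASL-LEX values.
    def frame(wrist: tuple[float, float]) -> Frame:
        return {"nose": (0.0, 0.0), "left_shoulder": (-1.0, 2.0),
                "right_shoulder": (1.0, 2.0), "right_wrist": wrist}

    signs = {
        "sign_a": (0.9, [frame((0.0, 4.0)), frame((0.0, 3.0))]),  # common handshape
        "sign_b": (0.5, [frame((0.0, 2.0))]),
        "sign_c": (0.1, [frame((0.0, 0.5))]),                      # rare handshape
    }
    freqs = [freq for freq, _ in signs.values()]
    dists = [hand_face_distance(frames) for _, frames in signs.values()]
    # A positive value means more frequent handshapes sit farther from the face.
    print(spearman(freqs, dists))
```

A rank correlation is used here only because it asks whether "more frequent" goes with "farther away" without assuming a linear relationship; the published analysis may use a different statistical model.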

This project is part of a new and growing body of work connecting computing and sign language at BU.

“The team behind these projects is dynamic, with signing researchers working in collaboration with computer vision scientists,” says Lauren Berger, a Deaf scientist and postdoctoral fellow at BU who works on computational approaches to sign language research. “Our varying perspectives, anchored by the oversight of researchers who are sensitive to Deaf culture, help prevent cultural and language exploitation just for the sake of pushing forward the cutting edge of technology and science.”

Understanding how sign languages work can help improve Deaf education, says Caselli, who hopes the latest findings also bring attention to the diversity in human languages and the extraordinary capabilities of the human mind.

“If all we study is spoken languages, it is hard to tease apart the things that are about language in general from the things that are particular to the auditory-oral modality. Sign languages offer a neat opportunity to learn about how all languages work,” she says. “Now with AI, we can manipulate large quantities of sign language videos and actually test these questions empirically.”

Naomi Caselli et al., Perceptual optimization of language: Evidence from American Sign Language, Cognition (2022). DOI: 10.1016/j.cognition.2022.105040

Citation:

New study shows American Sign Language is shaped by the people who use it to make communication easier (2022, March 29) retrieved 30 March 2022 from https://phys.org/news/2022-03-american-language-people-easier.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.