How new tech enhances sports analytics for athletes and fans alike

The 2022 World Cup ushered in a new era, making the tournament one of the most technologically advanced sporting spectacles in the world.

It’s all in the ball, for the most part. For the first time, every World Cup match in Qatar is being played with a ball equipped with a sensor that delivers precise data. That data matters to the professionals on the pitch now, and it could eventually benefit spectators and amateurs as well. If you’ve been watching the drama unfold, you probably noticed how exact the video assistant referee (VAR) calls have been throughout.

So precise, in fact, that officials can now call offside when only an attacking player’s leaning shoulder or a single foot is ahead of the last defender. Several goals were disallowed because of it. The combination of tech on display points to a future in which analytics become a more exact science that fans, amateur players and coaches can use, too.

A smarter soccer pitch

The tech itself isn’t entirely new. The Hawk-Eye optical camera systems pulling this off in Qatar are the same ones used on the pro tennis circuit to confirm whether any part of the ball touched a line. It was this system that confirmed Canada didn’t score a tying goal against Morocco in its final group-stage game, when Atiba Hutchinson’s header bounced off the crossbar and onto the goal line without fully crossing it. It literally was a game of inches.

The company behind the ball’s sensor is Kinexon, which partnered with FIFA to set all this up after proving the system’s potential in the German Bundesliga, where teams like Bayer Leverkusen and RB Leipzig used the technology in practice. Both clubs wanted to improve ball possession and passing, and to work on set pieces like free kicks and corner kicks, by better understanding how players were actually striking the ball.

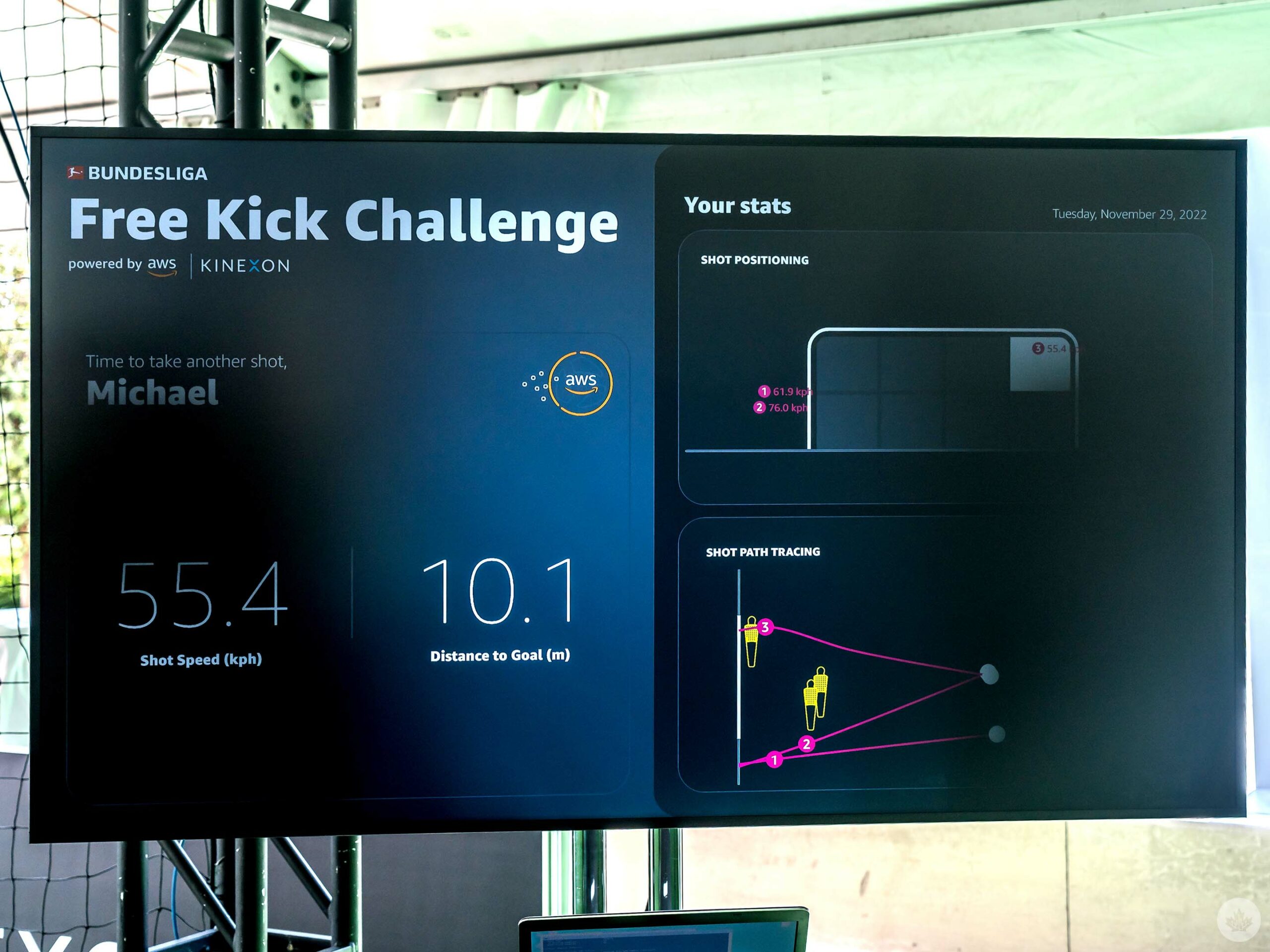

I got to try this myself at Amazon’s AWS re:Invent conference in Las Vegas, albeit in a restricted space roughly the size of an 18-yard penalty box. I had three kicks to hit specific spots in the net without the yellow stands (standing in for defenders) blocking them. Afterward, a TV screen nearby showed my results: where the ball went, along with its velocity and trajectory. Kinexon’s sensor tracks ball movement 500 times per second, and that data is combined with the optical camera tracking to produce the metrics shown onscreen. The sensor sits in the middle of the ball, suspended by technology Adidas developed for just that purpose.

Teams and coaches using the technology can see 3D renderings of what happened, just like the ones shown during broadcasts for those close offside calls. What’s interesting, at least for the sake of accuracy, is how fast the data moves: the sensor reports at 500Hz, about 10 times the frame rate of average video, and the data is transmitted using AWS.
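The 500Hz figure is easier to appreciate as arithmetic. The sketch below is a minimal illustration, assuming that “average video” means a 50fps broadcast feed (the rate implied by the 10x comparison) and using a hypothetical 30 m/s shot speed; neither assumption comes from Kinexon or FIFA.

```python
# Compare how finely a 500Hz ball sensor and a typical video feed slice time,
# and how far a fast-moving ball travels between consecutive samples.
# Assumptions: 50fps stands in for "average video"; 30 m/s is a hypothetical
# shot speed chosen only for illustration.

SENSOR_HZ = 500  # sensor sample rate cited in the article
VIDEO_FPS = 50   # assumed typical broadcast frame rate


def interval_ms(rate_hz: float) -> float:
    """Time between consecutive samples, in milliseconds."""
    return 1000.0 / rate_hz


def travel_cm(speed_m_s: float, rate_hz: float) -> float:
    """Distance a ball moving at speed_m_s covers between two samples, in cm."""
    return speed_m_s * 100.0 / rate_hz


if __name__ == "__main__":
    ball_speed = 30.0  # hypothetical shot speed in m/s
    print(f"Speed-up: {SENSOR_HZ / VIDEO_FPS:.0f}x")
    print(f"Sensor interval: {interval_ms(SENSOR_HZ):.0f} ms, "
          f"video interval: {interval_ms(VIDEO_FPS):.0f} ms")
    print(f"Ball travel per sample: {travel_cm(ball_speed, SENSOR_HZ):.0f} cm "
          f"(sensor) vs {travel_cm(ball_speed, VIDEO_FPS):.0f} cm (video)")
```

Run as a script, it reports a 10x speed-up, a 2 ms versus 20 ms gap between samples, and 6 cm versus 60 cm of ball travel between samples. That finer slicing is one reason a sensor can pin down the instant a ball is struck more tightly than video frames alone.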

To test the system, nations playing friendly matches before the tournament were given both balls with sensors inside and regular balls without them, to gauge whether players would notice any difference in weight or feel. Reportedly, none did, so the game was on with the system ready to roll.

On the basketball court

The AWS re:Invent show floor also had a similar setup for basketball, where a sensor-equipped ball can help players take better shots, starting at the free-throw line. I tried it twice, and while I shot far worse than I normally would, I didn’t notice anything different about the balls. Same weight, same bounce.

It collected interesting data, like the arc of the shot, its height and speed, and my positioning when I released it. That last part is the most intriguing because it lets coaches and players understand what might be going wrong with a shooter’s mechanics beyond a simple eye test. Did the player bend their elbow too little or too much? Did the makes look different in height or speed from the misses?
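Those questions boil down to a simple comparison: average each metric over the made shots and over the missed shots, then see where they differ. Here is a hypothetical Python sketch of that idea. The `ShotRecord` fields and the sample numbers are invented for illustration and do not reflect the data format of the AWS demo or any smart-ball product.

```python
# Hypothetical sketch: split sensor-logged free throws into makes and misses
# and compare the average arc, release height and speed of each group.
# ShotRecord and the sample values are invented for illustration only.
from dataclasses import dataclass
from statistics import mean


@dataclass
class ShotRecord:
    arc_deg: float           # launch angle of the shot, in degrees
    release_height_m: float  # height of the ball at release, in metres
    speed_m_s: float         # ball speed at release, in metres per second
    made: bool               # whether the shot went in


def compare_makes_and_misses(shots: list[ShotRecord]) -> dict[str, tuple[float, float]]:
    """Return {metric: (average over makes, average over misses)}."""
    makes = [s for s in shots if s.made]
    misses = [s for s in shots if not s.made]
    if not makes or not misses:
        raise ValueError("need at least one make and one miss to compare")
    metrics = {
        "arc_deg": lambda s: s.arc_deg,
        "release_height_m": lambda s: s.release_height_m,
        "speed_m_s": lambda s: s.speed_m_s,
    }
    return {
        name: (mean(get(s) for s in makes), mean(get(s) for s in misses))
        for name, get in metrics.items()
    }


if __name__ == "__main__":
    # Made-up session data, for illustration only.
    session = [
        ShotRecord(52.0, 2.30, 7.0, True),
        ShotRecord(51.0, 2.28, 7.1, True),
        ShotRecord(44.0, 2.20, 7.6, False),
        ShotRecord(46.0, 2.22, 7.4, False),
    ]
    for metric, (made_avg, missed_avg) in compare_makes_and_misses(session).items():
        print(f"{metric}: makes {made_avg:.2f}, misses {missed_avg:.2f}")
```

In this made-up session the misses come off flatter and faster than the makes, which is the kind of pattern a coach might then check against the shooter’s elbow and release.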

AWS offered a glimpse of what’s possible under a system like this, which works a lot like Kinexon’s. The New York Knicks and Philadelphia 76ers apparently use tech like it during practice, though it’s not clear exactly how or what they wanted to focus on for this season — or even if every player participates.

Smart basketballs have hit the market before, with Wilson, 94Fifty and SIQ among those that have tried it. I’ve even tested DribbleUp’s first smart ball, so I’ve experienced how mobile devices and apps can integrate with sporting goods. Those products aren’t as precise or intricate as full tracking systems, but as this kind of tech converges, and as smart balls come to feel just as natural as regular ones, they could grow in popularity as practice tools.

The NFL gets on board

That’s a lot like how Larry Fitzgerald, former wide receiver for the NFL’s Arizona Cardinals, sees it. Since his final season in 2020, the 11-time Pro Bowler has been an outspoken advocate for player safety, crediting sensor technology with helping extend his career to 17 years.

“When you’re young and super athletic, you can do everything physically and don’t have to take that stuff into consideration, but as you start getting older, you have to do more to be able to maintain your job and continue to play,” says Fitzgerald in an interview. “(The Cardinals) first put sensors in our pads that we never knew were there. I tried practicing with a high ankle sprain in my last year, and the trainer comes up to me and says, ‘you’re pushing off your right ankle 30% less than you were the same time last week. It makes no sense for you to be out here’. They shut me down right then to see how I felt the next day.”

Had it been five years earlier, Fitzgerald says he would’ve “pushed through” the injury in practice. Doing so may have threatened further injury to his hamstring, possibly taking him out of the lineup for several weeks. Playing through pain during a game is one thing, but not so much during practice, and so he rested to play the next game.
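The trainer’s “30% less” remark is a week-over-week comparison. A hypothetical sketch of that kind of check follows. The function names, the load values and the 20% alert threshold are assumptions made for illustration, since the article doesn’t describe how the Cardinals’ system measured push-off or decided when to alert staff.

```python
# Hypothetical week-over-week check of one player's push-off load.
# Function names, the units and the 20% threshold are illustrative
# assumptions, not the Cardinals' or the NFL's actual method.


def percent_drop(previous: float, current: float) -> float:
    """Percentage decrease from previous to current (negative if load rose)."""
    if previous <= 0:
        raise ValueError("previous load must be positive")
    return (previous - current) * 100.0 / previous


def should_flag(previous: float, current: float, threshold_pct: float = 20.0) -> bool:
    """True when the drop from last week meets or exceeds threshold_pct."""
    return percent_drop(previous, current) >= threshold_pct


if __name__ == "__main__":
    last_week, this_week = 1000.0, 700.0  # made-up right-ankle push-off loads
    drop = percent_drop(last_week, this_week)
    print(f"Push-off down {drop:.0f}% from last week; "
          f"flag: {should_flag(last_week, this_week)}")
```

With the made-up numbers above it reports a 30% drop and raises the flag, mirroring the trainer’s comment to Fitzgerald.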

Every NFL team has the option to access these kinds of analytics, and players can choose to opt out of using pads and equipment fitted with sensors during regular-season and playoff games. Only the NFL can see the data in real time, as a means to reduce injuries. The day after the week’s games are done, the league shares that data with the respective teams so they can gauge the metrics for themselves.

“It gives you a competitive advantage if you have (training staff) who takes the onus on things like that,” he says. “The league can’t tell you what helmet or pads to wear, but we would get independent brand reps come in to show us new gear. We would learn which cleats get out of the ground at a higher rate to save from blowing a knee out.”

Data like that has also spurred rule changes that may have helped reduce injuries in certain situations. Kickoffs now take place five yards closer to the receiving team’s end zone, which has led to more touchbacks, where the receiving team doesn’t have to run the ball back. The league found that 30 percent of all injuries came from kickoff returns, which make up only 4 percent of the plays in a game. The fewer 240-pound men running full steam into each other, the less chance of someone suffering a concussion or a broken limb, he adds.
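Those two percentages imply a striking per-play risk. The arithmetic below uses only the 30 percent and 4 percent shares quoted above, and assumes they are shares of all injuries and all plays respectively; dividing one by the other gives a kickoff’s injury rate per play relative to the average play.

```python
# Per-play injury risk implied by the article's kickoff figures.
# Inputs from the article: kickoff returns = 30% of injuries, 4% of plays.


def relative_risk(injury_share_pct: float, play_share_pct: float) -> float:
    """Injuries per play for a play type, relative to the all-plays average.

    (type injuries / all injuries) / (type plays / all plays)
    = (type injuries per type play) / (all injuries per play)
    """
    return injury_share_pct / play_share_pct


KICKOFF_INJURY_PCT = 30
KICKOFF_PLAY_PCT = 4

kickoff_vs_average = relative_risk(KICKOFF_INJURY_PCT, KICKOFF_PLAY_PCT)
other_vs_average = relative_risk(100 - KICKOFF_INJURY_PCT, 100 - KICKOFF_PLAY_PCT)
kickoff_vs_other = kickoff_vs_average / other_vs_average

if __name__ == "__main__":
    print(f"Kickoff vs average play: {kickoff_vs_average:.1f}x")
    print(f"Kickoff vs non-kickoff play: {kickoff_vs_other:.1f}x")
```

By these figures, a kickoff return carries about 7.5 times the injury risk of an average play and roughly 10 times that of a non-kickoff play, which helps explain why the league targeted it.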

It’s not clear if or when the NFL will allow teams to use data like that during games. Fitzgerald points to the competitive advantage it would give some teams, since not all players currently wear sensor-equipped gear.

Spectators and players

Analytics continue to play a bigger role in recruitment, drafting and player development, but much of the data is still under wraps. As smarter equipment comes to market for the everyday amateur, including children learning to play, data may be a factor for parents. I didn’t see anything on the show floor specifically talking about that, but that may change in future tech or sports conferences.

With sports betting now fully legal in Canada, data could also figure into the betting line somehow. The World Cup was an ideal tournament to test interactive features through apps that could tie into some of what happens on the pitch in ways never seen before. Broadcasters focus on the key plays, but what if there was a way to tap into the ball’s data for pretty much any play that happens? Baseball does this with pitch speed or home run distance. Hockey could theoretically do the same with shots or passes, now that sensors in pucks are commonplace.

Sports junkies looking for more insights may appreciate that kind of granular detail, much as younger kids could try to emulate a superstar by learning more about how that player actually performs.

Kinexon sees this kind of data as both improving performance and keeping the game safer and honest. We’ll have to see how the games people love change the more they embrace sophisticated tech like this.
