Harnessing machine learning to make nanosystems more energy efficient

Getting something for nothing doesn’t work in physics. But it turns out that, by thinking like a strategic gamer, and with some help from a demon, improved energy efficiency for complex systems like data centers might be possible.

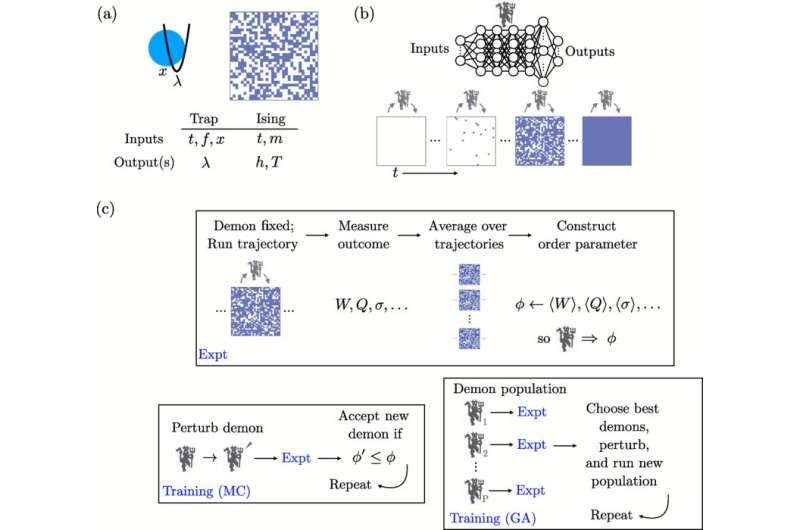

In computer simulations, Stephen Whitelam of the Department of Energy’s Lawrence Berkeley National Laboratory (Berkeley Lab) used neural networks (a type of machine learning model loosely inspired by the brain’s networks of neurons) to learn how to operate nanosystems, which are tiny machines about the size of molecules, with greater energy efficiency.

What’s more, the simulations showed that learned protocols, by constantly measuring the systems to find the most energy-efficient operations, could draw heat from them.

“We can get energy out of the system, or we can store work in the system,” Whitelam said.

It’s an insight that could prove valuable, for example, in operating very large systems like computer data centers. Banks of computers produce enormous amounts of heat that must be extracted—using still more energy—to prevent damage to the sensitive electronics.

Whitelam conducted the research at the Molecular Foundry, a DOE Office of Science user facility at Berkeley Lab. His work is described in a paper published in Physical Review X.

Inspiration from Pac-Man and Maxwell’s Demon

Asked about the origin of his ideas, Whitelam said, “People had used techniques in the machine learning literature to play Atari video games that seemed naturally suited to materials science.”

In a video game like Pac-Man, he explained, the job of the machine learning algorithm is to choose which action to perform (up, down, left, right, and so on) and when to perform it. Over time, the algorithm “learns” which moves, made at which moments, earn the highest scores. The same algorithms can work for nanoscale systems.
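The Atari-playing techniques Whitelam mentions come from reinforcement learning, in which an agent improves its choice of moves through trial, error, and reward. As a minimal sketch of that idea (a textbook tabular Q-learning loop, not the method used in the paper, and not Pac-Man), the Python below trains an agent in a hypothetical six-cell corridor whose only reward sits in the last cell. The corridor, the reward, and the parameter values (`alpha`, `gamma`, `epsilon`) are illustrative assumptions.

```python
import random

N_CELLS = 6          # hypothetical corridor: cells 0..5, reward waits in cell 5
MOVES = (-1, +1)     # action 0 = step left, action 1 = step right

def env_step(cell, action):
    """Move one cell (wall at the left end); reward 1 only on reaching the last cell."""
    nxt = min(max(cell + MOVES[action], 0), N_CELLS - 1)
    done = nxt == N_CELLS - 1
    return nxt, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning with epsilon-greedy exploration; returns the Q table."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_CELLS)]
    for _ in range(episodes):
        cell, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.randrange(2)  # explore: random move
            else:  # exploit: best-known move, ties broken at random
                action = max(range(2), key=lambda a: (q[cell][a], rng.random()))
            nxt, reward, done = env_step(cell, action)
            # Bellman target: reward now plus discounted value of the best next move
            target = reward if done else reward + gamma * max(q[nxt])
            q[cell][action] += alpha * (target - q[cell][action])
            cell = nxt
    return q

q = train()
policy = [max(range(2), key=lambda a: q[c][a]) for c in range(N_CELLS - 1)]
print(policy)  # 1 = "step right"
```

After training, the greedy policy should be “step right” (action 1) in every cell before the goal: the agent has learned, from reward alone, which move to make where.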

Whitelam’s simulations are also something of an answer to an old thought experiment in physics called Maxwell’s Demon. In 1867, physicist James Clerk Maxwell imagined a box filled with gas and divided in two by a wall with a trap door controlled by a massless “demon.” The demon would open the door to let faster gas molecules pass to one side of the box and slower molecules to the other.

Eventually, with the molecules sorted this way, the “slow” side of the box would be cold and the “fast” side hot, reflecting the average kinetic energy of the molecules on each side.
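The sorting step of the thought experiment is easy to mimic numerically. The sketch below is an idealized illustration, assuming one-dimensional molecular velocities drawn from a Maxwell-Boltzmann (Gaussian) distribution, a demon that can read every speed for free, and a hypothetical cutoff at the median speed. It reports each side’s effective temperature through equipartition, k_B T = m⟨v²⟩ in one dimension, in units where k_B T = m = 1 before sorting.

```python
import random
import statistics

def demon_sort(n_molecules=10_000, kT=1.0, mass=1.0, seed=0):
    """Split molecules at the median speed; return (kT_slow, kT_fast)."""
    rng = random.Random(seed)
    sigma = (kT / mass) ** 0.5                  # 1D Maxwell-Boltzmann: v ~ N(0, kT/m)
    speeds = [abs(rng.gauss(0.0, sigma)) for _ in range(n_molecules)]
    cutoff = statistics.median(speeds)          # hypothetical demon rule
    slow = [v for v in speeds if v <= cutoff]   # sent to the "cold" side
    fast = [v for v in speeds if v > cutoff]    # sent to the "hot" side

    def effective_temperature(vs):
        # Equipartition in 1D: (1/2) m <v^2> = (1/2) kT, so kT = m <v^2>
        return mass * statistics.fmean(v * v for v in vs)

    return effective_temperature(slow), effective_temperature(fast)

t_slow, t_fast = demon_sort()
print(f"cold side kT = {t_slow:.2f}, hot side kT = {t_fast:.2f}")
```

The cold side should come out well below the starting kT = 1 and the hot side well above it, while their average stays near 1. In this idealized picture the demon has created a temperature difference without doing work on the gas, which is exactly the apparent paradox.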

Checking the refrigerator

The system would constitute a heat engine, Whitelam said. Importantly, however, Maxwell’s Demon doesn’t violate the laws of thermodynamics by getting something for nothing, because information carries an energy cost. Acquiring and eventually erasing information about the positions and speeds of the molecules in the box costs at least as much energy as the resulting heat engine can deliver.
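One standard way to put a number on the energy cost of information is Landauer’s principle: erasing one bit of information at temperature T dissipates at least k_B T ln 2 of heat, which is also the most work that one bit of measurement can let a demon extract. The short calculation below evaluates that bound; room temperature (300 K) is an illustrative assumption.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact in the 2019 SI)

def landauer_limit(temperature_k, bits=1):
    """Minimum heat (joules) dissipated when erasing `bits` bits at temperature_k kelvin."""
    return bits * K_B * temperature_k * math.log(2)

print(f"{landauer_limit(300):.3e} J per bit at 300 K")  # prints 2.871e-21 J per bit at 300 K
```

The bound scales linearly with both temperature and the number of bits, which is why a demon that measures more has more to pay back.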

And heat engines can be useful things. Refrigerators provide a good analogy, Whitelam said. As the system runs, food inside stays cold (the desired outcome), even though the back of the fridge gets hot as a result of the work done by the refrigerator’s motor.

In Whitelam’s simulations, the machine learning protocol can be thought of as the demon. During optimization, it converts information gathered from the modeled system into energy, drawn in as heat.

Unleashing the demon on a nanoscale system

In one simulation, Whitelam optimized the process of dragging a nanoscale bead through water. He modeled a so-called optical trap in which laser beams, acting like tweezers of light, can hold and move a bead around.

“The name of the game is: Go from here to there with as little work done on the system as possible,” Whitelam said. The bead jiggles under natural fluctuations called Brownian motion as water molecules bombard it. Whitelam showed that if these fluctuations can be measured, the bead can be moved at the most energy-efficient moments.

“Here we’re showing that we can train a neural-network demon to do something similar to Maxwell’s thought experiment but with an optical trap,” he said.
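A toy version of the optical-trap problem can be simulated directly. The sketch below is an assumption-laden illustration, not the neural-network protocol from the paper: it models the bead with overdamped Langevin dynamics in a harmonic trap, in dimensionless units where trap stiffness, friction, and k_B T all equal 1, and compares two hypothetical ways of moving the trap 5 units. The “blind” protocol drags the trap at constant speed; the “feedback” protocol measures the bead perfectly at every time step and jumps the trap forward to the bead only after a Brownian kick has already carried the bead ahead. Work is counted as the change in trap energy each time the trap moves.

```python
import math
import random
import statistics

# Dimensionless units (an assumption for this sketch): stiffness = friction = kT = 1.
DT = 0.01  # time step between measurements / integration steps

def trap_energy(x, center):
    """Harmonic optical-trap potential energy of a bead at x."""
    return 0.5 * (x - center) ** 2

def langevin_step(x, center, rng):
    """One Euler-Maruyama step of overdamped Brownian motion in the trap."""
    return x - (x - center) * DT + math.sqrt(2.0 * DT) * rng.gauss(0.0, 1.0)

def drag_blind(distance=5.0, duration=5.0, rng=None):
    """Move the trap at constant speed, ignoring the bead; return total work done."""
    rng = random.Random(0) if rng is None else rng
    n_steps = int(round(duration / DT))
    x = center = work = 0.0
    for i in range(1, n_steps + 1):
        new_center = distance * i / n_steps
        # Work = energy change when the trap moves with the bead held fixed.
        work += trap_energy(x, new_center) - trap_energy(x, center)
        center = new_center
        x = langevin_step(x, center, rng)
    return work

def drag_with_feedback(distance=5.0, rng=None, max_steps=1_000_000):
    """Hypothetical ratchet rule: jump the trap to the bead only after the bead
    has fluctuated ahead of it; return (total work, final trap position)."""
    rng = random.Random(0) if rng is None else rng
    x = center = work = 0.0
    for _ in range(max_steps):
        if center >= distance:
            break
        if x > center:  # perfect, instantaneous measurement assumed
            new_center = min(x, distance)
            work += trap_energy(x, new_center) - trap_energy(x, center)
            center = new_center
        x = langevin_step(x, center, rng)
    return work, center

blind = statistics.fmean(drag_blind(rng=random.Random(s)) for s in range(200))
feedback_work, final_center = drag_with_feedback()
print(f"blind drag, mean work: {blind:.2f}; feedback drag, work: {feedback_work:.2f}")
```

Because the feedback rule only ever moves the trap closer to the bead, every jump lowers the trap energy, so its total work is never positive: the energy comes from heat drawn from the surrounding water, obtained with measurement information whose own thermodynamic cost the sketch ignores. The blind drag, averaged over many runs, costs roughly 4 k_B T in these units.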

Cooling computers

Whitelam extended the idea to microelectronics and computation. He used the machine learning protocol to simulate flipping the state of a nanomagnetic bit between 0 and 1, a basic operation underlying information erasure and copying in computing.

“Do this again, and again. Eventually, your demon will ‘learn’ how to flip the bit so as to absorb heat from the surroundings,” he said. Returning to the refrigerator analogy, he added, “You could make a computer that cools down as it runs, with the heat being sent somewhere else in your data center.”

Whitelam said the simulations are like a testbed for understanding concepts and ideas. “And here the idea is just showing that you can perform these protocols, either with little energy expense, or energy sucked in at the cost of going somewhere else, using measurements that could apply in a real-life experiment,” he said.

More information:

Stephen Whitelam, Demon in the Machine: Learning to Extract Work and Absorb Entropy from Fluctuating Nanosystems, Physical Review X (2023). DOI: 10.1103/PhysRevX.13.021005

Citation:

Harnessing machine learning to make nanosystems more energy efficient (2023, May 12), retrieved 13 May 2023 from https://phys.org/news/2023-05-harnessing-machine-nanosystems-energy-efficient.html

This document is subject to copyright. Apart from any fair dealing for the purpose of private study or research, no part may be reproduced without the written permission. The content is provided for information purposes only.