Geekbench 6 interview: It’s a big improvement over its predecessor

We had the opportunity to talk to John Poole, the founder of Primate Labs, which is the company behind the popular Geekbench benchmarking tool. We talked about Geekbench 6, the latest version of the software that was recently announced. Poole explained what makes it different from its predecessor and whether its scores are comparable to those of previous versions.

He also shared details on why he created Geekbench in the first place, the problems he saw in other benchmarking tools he used in the past, and much more. You can read a brief overview of the interview below or check out the whole thing in the video above.

Q: How did you come up with the idea for Geekbench, and what problem did you want to solve with it?

A: It all started back in 2003 when I switched from a PC to a Mac with a G5 system, which was billed as the first 64-bit desktop computer. I ran a lot of tests on it and found that it wasn’t that much faster. I was a bit confused, so I downloaded a few popular Mac benchmarks available at the time to see if it was a problem with my system.

The benchmarks said that the G5 was faster and on par with all the other G5s out there, which seemed strange to me. So I decided to reverse engineer one of the popular benchmarks and found that the tests were very small and synthetic. They were doing very simple tasks that weren’t a good measure of overall performance. They focused only on how fast the processor ran and didn’t take anything else, such as memory, into account.

I then decided to write my own tests and see what would happen. It was a side project that I worked on for about three years. Then, in 2006, the first version of Geekbench was released as a free download.

We got a lot of great feedback from people at the time, which helped us grow into the business we are today, providing benchmarks for millions of users each month.

Q: How has the company grown since the first release of Geekbench? You’re likely not working on the software alone anymore?

Robert Triggs / Android Authority

A: We now have a small but mighty team here in Canada, and we mainly work remotely, especially after the pandemic. The entire team is located in Ontario, with most people being from Toronto.

We have people working in a variety of different roles, with some working on the benchmark itself, while others are more focused on the AI workloads we’re working on. Then there are people working on data science, analyzing the results to make sure we have good statistical rigor, and then there’s me — the pretty face of the company.

Q: You mentioned that the biggest issue with other benchmarking tools is that they are small and synthetic, so they don’t simulate real-world usage. How exactly is Geekbench 6 different and better?

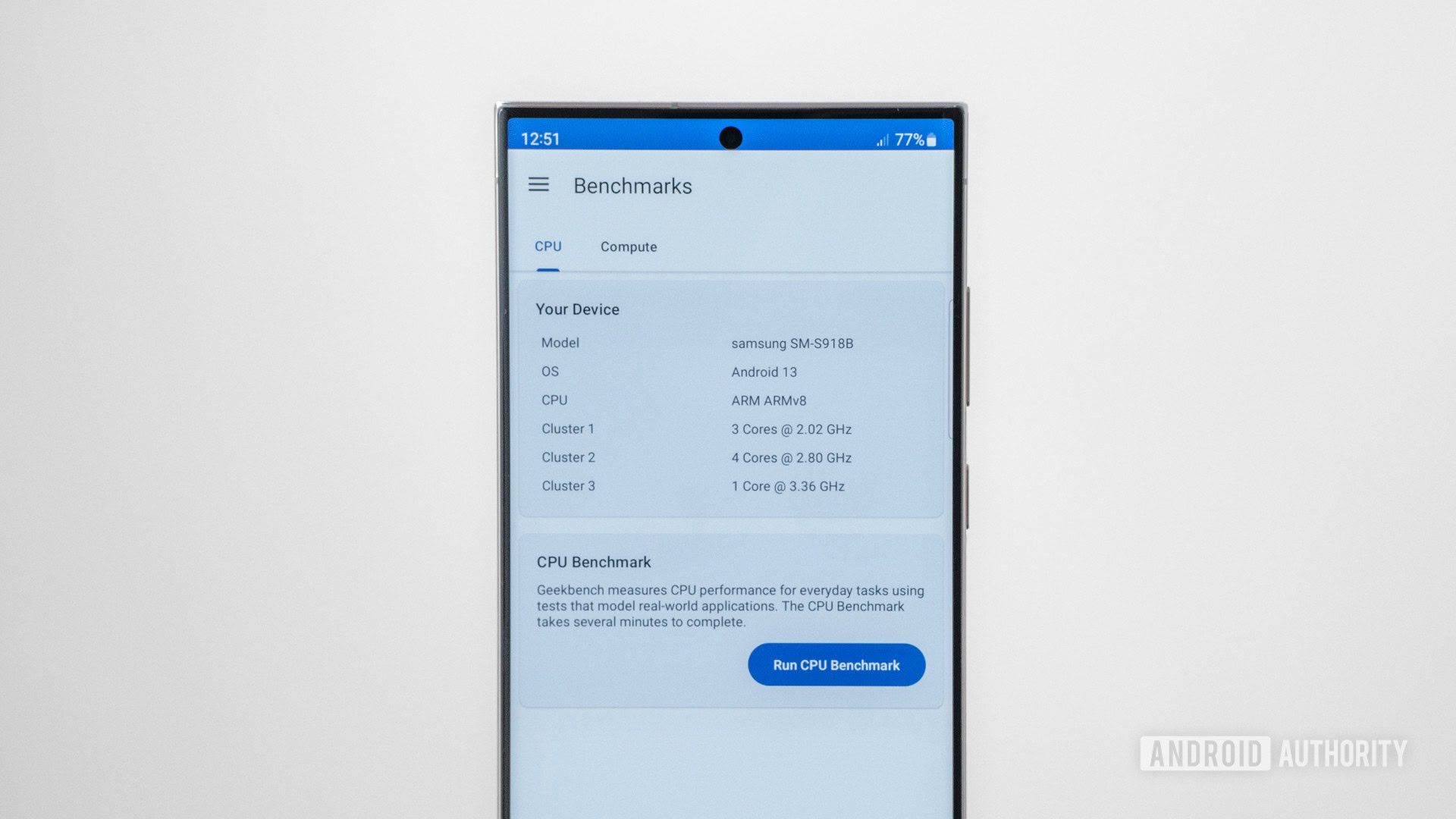

A: We have 15 separate workloads in Geekbench 6 that we use to measure CPU performance. We’ve tried to pick a variety of different tasks that reflect what we think people use their computers and smartphones for, day in and day out. So we’re really trying to home in on what people are going to do with their devices.

We’re focused on things like compression, which is important because when you download apps on your smartphone, Android will unpack and then install them. We have HTML tests because people spend a lot of time in browsers, so it’s an important metric to capture.

Then there’s video conferencing, which gained traction during the pandemic. We have a background blur workload, which is when your face is visible but the background is blurred so people don’t see your bedroom, for example. That workload wasn’t that relevant three or four years ago but became important because of the pandemic.

We really try to look at things that are CPU intensive and actually matter for the device day in and day out so that we’re not just running small and simple tasks. This is important because we don’t want Geekbench to exist in a vacuum. We don’t want it to be a benchmark that just tells you that this processor is better or worse. We want it to be representative of what people actually do with their devices so they can make a decision on whether it’s time to upgrade.

Q: You mentioned that you’re doing work on AI benchmarking. Can you tell us more about that?

A: We had ML (machine learning) benchmarks in Geekbench 5, and we now have new ML benchmarks in Geekbench 6. As I already mentioned, we have a background blur workload that mimics what Zoom is doing, where we’re segmenting an image and saying this part of the image is the foreground, so don’t blur it, and this part is the background, so blur it.
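To make the idea concrete, here is a minimal sketch of the compositing step only. It is not Geekbench's implementation: it assumes the segmentation mask is already available (in a real pipeline an ML model would produce it), it uses a simple box blur as a stand-in for whatever filter a video app actually applies, and the names `box_blur` and `blur_background` are hypothetical.

```python
def box_blur(image, radius=1):
    """Return a copy of a grayscale image where each pixel is the mean
    of its (2*radius+1)-wide neighborhood, clipped at the image edges."""
    height, width = len(image), len(image[0])
    blurred = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total, count = 0.0, 0
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    total += image[ny][nx]
                    count += 1
            blurred[y][x] = total / count
    return blurred


def blur_background(image, foreground_mask, radius=1):
    """Keep foreground pixels sharp and replace background pixels with
    their blurred values. foreground_mask[y][x] is True for foreground."""
    blurred = box_blur(image, radius)
    return [
        [image[y][x] if foreground_mask[y][x] else blurred[y][x]
         for x in range(len(image[0]))]
        for y in range(len(image))
    ]


# Toy 3x3 grayscale frame: one bright "face" pixel in the center.
frame = [[0, 0, 0],
         [0, 9, 0],
         [0, 0, 0]]
mask = [[False, False, False],
        [False, True, False],
        [False, False, False]]

result = blur_background(frame, mask)
```

In this toy frame the center pixel keeps its original value, while the surrounding background pixels are replaced by neighborhood averages. The expensive part in practice is producing the mask, which this sketch deliberately leaves out.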

We also have a few other workloads, including a photo library workload that goes through some of the steps you might have when importing photos into a library. Apps like Google Photos, for example, will use ML to tag your images, making it easier for you to find pictures of your baby or cat later on when you’re searching for them.

We also have a separate benchmark that we released back in 2020 that’s still a work in progress. We’re looking at the performance of ML across a huge variety of workloads and taking the traditional models and applications like image recognition, object detection, face detection, and on-device translation. We’re running these on not only CPUs, but on GPUs and NPUs as well to see their performance.

And since a lot of NPUs and modern ML frameworks are making trade-offs for performance versus accuracy, we’re also trying to capture that as a metric. But that’s laser-focused on ML and doesn’t have the same applicability as the Geekbench suite.

Q: Can you tell us a little bit more about Geekbench 6?

A: Geekbench 6 is the evolution of Geekbench as a real-world benchmark. For the last few versions, it has measured the performance of the CPU and GPU for things like web browsers, photo applications, and filters for social media. So things people are doing day in and day out.

With Geekbench 6, we’ve tried to further improve the real-world relevance of the benchmark with things like the background blur, which I’ve already mentioned. We also tried to figure out how people are using ML to organize their lives in a certain way, which is why we created the photo library workload I also already mentioned.

We also improved the data sets we use for some of the other workloads, so workloads that were already in Geekbench 5 now work on larger data sets in Geekbench 6. An obvious example of this is with mobile devices. There’s a difference between the camera sensors phones had back in 2019, when Geekbench 5 came out, and the sensors they have now, with phones shipping 48MP and 108MP cameras. So there’s been an explosion in image size, and applications have to deal with that. We’re trying to answer questions like, “How does your phone deal with a 48MP image that your camera generated?” So making the data sets bigger and the workloads more relevant and realistic was the big push with Geekbench 6.
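For a rough sense of scale, the arithmetic below estimates how much memory a decoded image occupies at those resolutions. It is a back-of-the-envelope sketch that assumes 3 bytes per pixel (8-bit RGB with no alpha channel) and decimal megabytes; real camera pipelines may use other formats, and the function name `decoded_size_mb` is hypothetical.

```python
BYTES_PER_PIXEL = 3  # assumption: 8-bit RGB, no alpha channel


def decoded_size_mb(megapixels, bytes_per_pixel=BYTES_PER_PIXEL):
    """Approximate in-memory size of a decoded image,
    in megabytes (1 MB = 10**6 bytes)."""
    total_bytes = megapixels * 1_000_000 * bytes_per_pixel
    return total_bytes / 1_000_000


# Sizes for the two sensor resolutions mentioned in the interview.
sizes = {mp: decoded_size_mb(mp) for mp in (48, 108)}
for mp, size in sizes.items():
    print(f"{mp}MP image: about {size:.0f} MB uncompressed")
```

Under these assumptions a 48MP frame is roughly 144 MB once decoded and a 108MP frame roughly 324 MB, which is far more data than a small synthetic test would touch.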

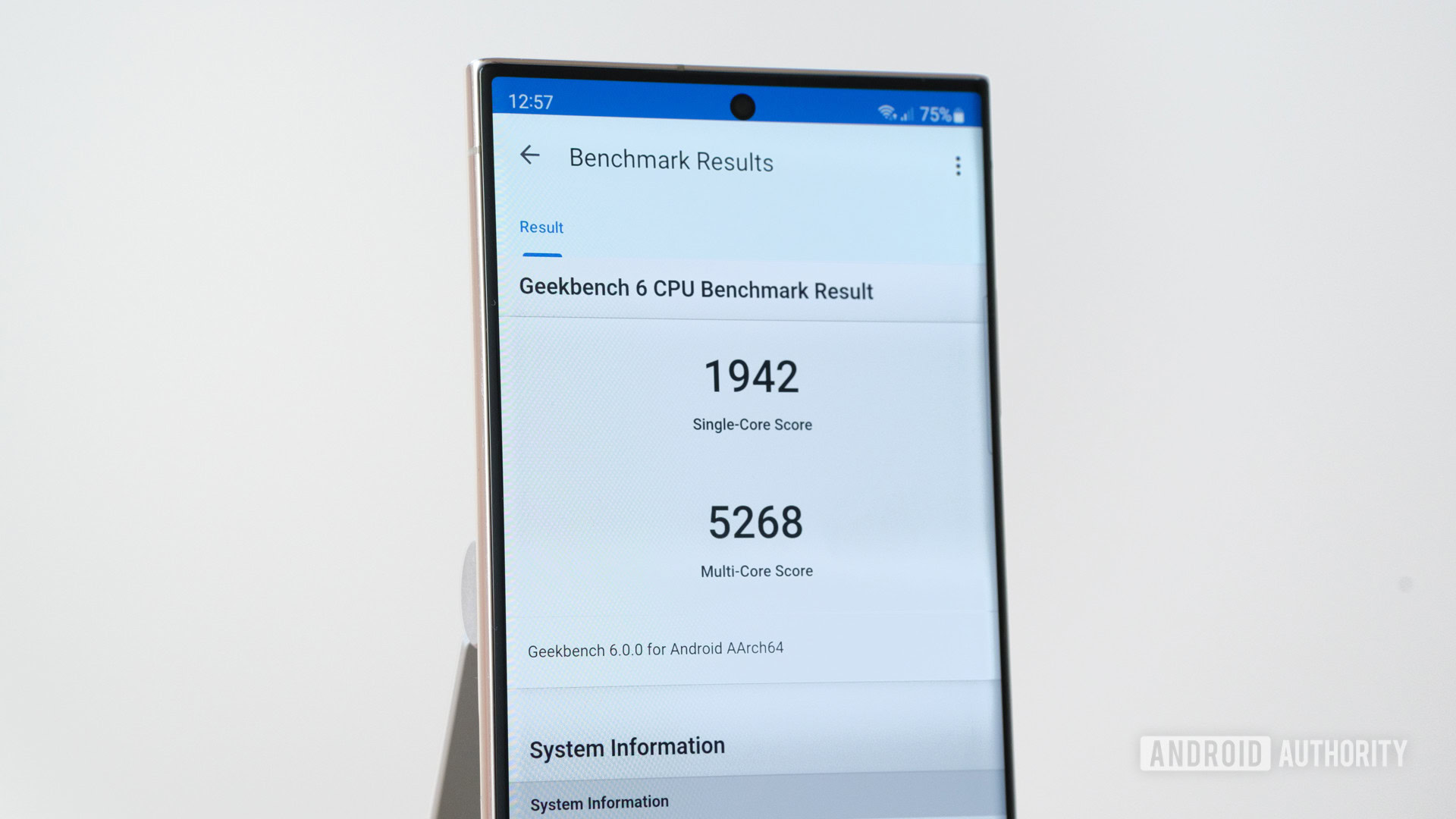

Another thing we did is we completely changed the way we do threading in Geekbench 6. In Geekbench 5, we always split the results into a single-core score and a multi-core score. In Geekbench 6, we still have the same single-core score and the multi-core score, but we’ve actually changed the way we get the multi-core score.

Q: The scores from Geekbench 6 can’t be compared to the scores from Geekbench 5 since it’s a completely different benchmark. What about when it comes to versions like Geekbench 5.1 and 5.2? Are the scores always comparable?

A: In the past, 3.0 wasn’t comparable with 3.1, and 4.0 wasn’t comparable with 4.1. While we’re able to catch a lot of issues before the software is released, we do miss things and get feedback from people after the software is already live. We then take that feedback and fix the mistakes within the first month or two.

So whether Geekbench 6.0 will be comparable with 6.1 is hard to say right now, but the following versions like 6.2 and 6.3 should be comparable since we’re mainly adding support for new hardware.

This is just a quick overview of the conversation we had with John Poole from Primate Labs. If you want to learn more, check out the video at the top of the page.
